Use 16GB SSD for Swap on Amazon Linux c3.large Instance

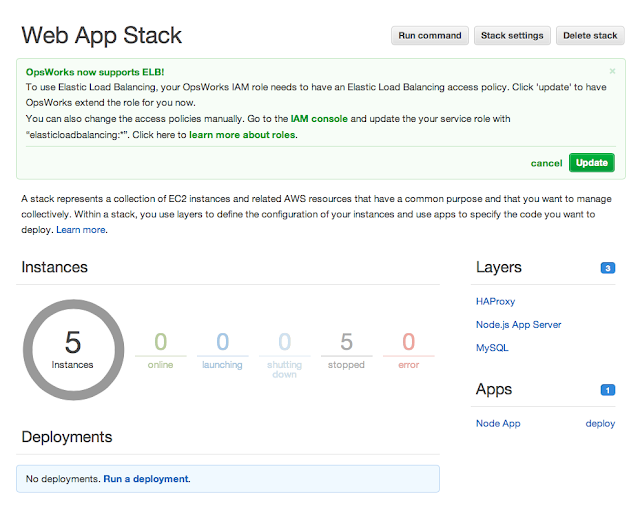

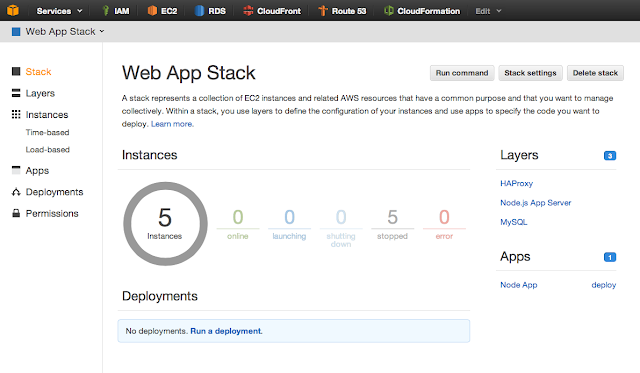

Amazon announced the new-generation C3 instance types, compute-optimized instances available in five sizes: c3.large, c3.xlarge, c3.2xlarge, c3.4xlarge and c3.8xlarge, with 2, 4, 8, 16 and 32 vCPUs respectively. According to Amazon, C3 instances offer the highest-performance processors and the lowest price/compute performance of all Amazon EC2 instance types. They also feature Enhanced Networking and SSD-based instance storage. On C3 instances, each vCPU is a hardware hyperthread of a 2.8 GHz Intel Xeon E5-2680v2 (Ivy Bridge) processor.

Setting up a c3.large instance with SSDs

When setting up the instance, make sure you add both instance store volumes (a c3.large comes with two 16GB SSDs). How you set up the root storage is up to you; for this example I opted for a 16GB volume with 480 provisioned IOPS. Provisioned IOPS must not exceed 30 IOPS per GB of volume size (a 30:1 ratio), so 16 × 30 = 480 IOPS is the most a 16GB volume allows.

Testing the SSD speed

Once the instance is set up and launched, we can use the second 16GB SSD (attached as /dev/sdc) for swap on the new Amazon Linux c3.large instance.

# Test SS
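Turning the second SSD into swap comes down to three steps: format it with `mkswap`, enable it with `swapon`, and add an `/etc/fstab` entry so it is re-enabled at boot. The POSIX shell script below is a minimal sketch of those steps, not the author's exact commands. It assumes the volume attached as /dev/sdc shows up inside the guest as /dev/xvdc (Amazon Linux's Xen kernels typically rename `sdX` devices to `xvdX`; confirm with `ls /dev/xvd*` first) and that the device is not mounted. The function names `fstab_swap_entry` and `enable_ssd_swap` and the `SWAP_DEVICE` variable are hypothetical helpers for illustration.

```shell
#!/bin/sh
# Sketch: use the second c3.large instance-store SSD as swap.
# Assumption: the volume attached as /dev/sdc appears in the guest as /dev/xvdc.
# Verify the device name before running: mkswap erases the device.
set -eu

# Hypothetical variable; override it if your device name differs.
SWAP_DEVICE="${SWAP_DEVICE:-/dev/xvdc}"

# Print the /etc/fstab line that re-enables the swap device at boot.
fstab_swap_entry() {
    printf '%s none swap sw 0 0\n' "$1"
}

# Format the device as swap, turn it on and persist it in /etc/fstab.
# Must run as root; any data on the device is destroyed.
enable_ssd_swap() {
    device="$1"
    # Refuse to touch a device that is currently mounted as a filesystem.
    if grep -q "^${device} " /proc/mounts; then
        echo "refusing: ${device} is mounted; unmount it first" >&2
        return 1
    fi
    mkswap "$device"
    swapon "$device"
    # Append the fstab entry only if a swap entry for this device is not already present.
    if ! grep -q "^${device}[[:space:]].*[[:space:]]swap[[:space:]]" /etc/fstab; then
        fstab_swap_entry "$device" >> /etc/fstab
    fi
    # List active swap areas to confirm the SSD is in use.
    swapon -s
}
```

Sourced into a root shell, `enable_ssd_swap "$SWAP_DEVICE"` runs all three steps, and `free -m` should then report the additional swap. Keep in mind that instance store contents are lost when the instance is stopped, so after a stop/start the device may need `mkswap` again before the fstab entry can take effect.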